TDD enables us to deliver better-designed, cleaner and more extensible code. Being agile means frequent code changes and refactoring. TDD helps us maintain quality through these iterations and keeps rework to a minimum once the code goes live.

Red > Green > Refactor: the test-driven development (TDD) “mantra” that developers use to focus on writing only the code necessary to pass the tests. The aim of this development style is to 1) reduce errors caused by wrongly assuming that an implementation will behave as expected, and 2) reduce bugs (regressions) introduced when new functionality is added to existing code. Having used TDD to deliver better-designed, cleaner and more extensible code, I have decided to share my thoughts and findings on some of the challenges and benefits of this development style.

What is TDD?

TDD is a software development process that relies on a short iterative cycle:

- Red – write a failing test that describes the expected behaviour of a new feature.

- Green – write just enough production code to make the test pass. Fake it if necessary, but remember that you will need to refactor it later.

- Refactor – improve the design of the production code until it reaches an acceptable standard, re-running the tests to make sure they stay green.

- Repeat – continue the cycle until there are no new tests to write and the design is as good as it needs to be.
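To make the cycle concrete, here is a minimal sketch in Python's built-in `unittest`, chosen purely for illustration. The `is_leap_year` feature is hypothetical and does not come from our projects. The comments mark which step of the cycle each piece belongs to. Only the end state is shown; the earlier "fake" version is described in a comment.

```python
import unittest


# RED: each test below was written before the code existed and seen to fail.
# They were added one at a time, each new one starting red.
class LeapYearTests(unittest.TestCase):
    def test_year_divisible_by_4_is_a_leap_year(self):
        self.assertTrue(is_leap_year(2024), "2024 is divisible by 4")

    def test_century_year_is_not_a_leap_year(self):
        self.assertFalse(is_leap_year(1900), "1900 is a century not divisible by 400")

    def test_century_divisible_by_400_is_a_leap_year(self):
        self.assertTrue(is_leap_year(2000), "2000 is divisible by 400")


# GREEN: with only the first test in place, the simplest passing code was a
# fake, `return True`. Each later test went red until one more rule was added.
#
# REFACTOR: with all tests green, the rules were tidied into the form below,
# re-running the tests after every change to keep them green.
def is_leap_year(year: int) -> bool:
    """Gregorian rule: every 4th year, except centuries not divisible by 400."""
    if year % 400 == 0:
        return True
    if year % 100 == 0:
        return False
    return year % 4 == 0
```

If you save this as, say, `test_leap_year.py`, you can run the tests with `python -m unittest test_leap_year`.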

Understanding TDD and what to test

- Write acceptance tests that correspond to a user story (feature) and its scenarios. This way, it is the expected behaviour of the software that gets tested.

- Write unit tests for the individual components that an acceptance test exercises. While writing the acceptance test, keep a “to do” list of all the child tests it implies, and implement them one at a time.

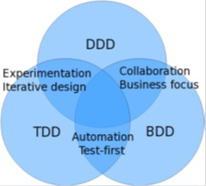

- Both acceptance and unit tests should be written in the style of a BDD (behaviour-driven development) scenario: given some context or input, when an action or event occurs, then assert the expected outcome.

- When fixing a bug, first write a test that reproduces the bug (and therefore fails), then write the production code that fixes it.

- Tests should be written against the API or domain layers, as this is where the behaviour lives. For example, if your software uses the MVC (Model-View-Controller) or MVP (Model-View-Presenter) pattern, the tests should be written against the Controller or Presenter. Aslam Khan has written a very useful DZone reference card, ‘Getting Started with Domain-Driven Design’.
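The guidelines above can be combined in one small example: BDD-style scenarios written against a domain object rather than the UI, plus a regression test for a bug. This is a minimal sketch in Python's `unittest`, chosen for illustration. The `Basket` class, its methods and the "negative total" bug are hypothetical.

```python
import unittest
from decimal import Decimal


class Basket:
    """Hypothetical domain object: the behaviour under test lives here, not in the UI."""

    def __init__(self):
        self._lines = []  # (unit_price, quantity) pairs
        self._discount = Decimal("0")

    def add_item(self, unit_price: Decimal, quantity: int = 1) -> None:
        self._lines.append((unit_price, quantity))

    def apply_discount(self, amount: Decimal) -> None:
        self._discount += amount

    def total(self) -> Decimal:
        gross = sum((price * qty for price, qty in self._lines), Decimal("0"))
        # Fix for the hypothetical bug: a discount larger than the basket
        # used to produce a negative total, so the total is clamped at zero.
        return max(gross - self._discount, Decimal("0"))


class BasketScenarios(unittest.TestCase):
    def test_total_is_sum_of_line_items(self):
        # Given a basket with two lines
        basket = Basket()
        basket.add_item(Decimal("10.00"), quantity=2)
        basket.add_item(Decimal("5.50"))
        # When the total is calculated
        total = basket.total()
        # Then it is the sum of price x quantity over all lines
        self.assertEqual(total, Decimal("25.50"), "total should sum all line items")

    def test_discount_larger_than_basket_does_not_give_negative_total(self):
        # Regression test: written first to reproduce the bug (red),
        # then the clamp in Basket.total() made it pass (green).
        # Given a basket worth 5.00
        basket = Basket()
        basket.add_item(Decimal("5.00"))
        # When a 20.00 discount is applied
        basket.apply_discount(Decimal("20.00"))
        # Then the total is zero, not negative
        self.assertEqual(basket.total(), Decimal("0"), "total must never go negative")
```

Neither test touches a user interface. Both drive the `Basket` object directly, because that is the layer where the behaviour lives.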

At KRS we prefer NUnit for our C# desktop and web API projects in Visual Studio, and a combination of Chutzpah, Jasmine or QUnit, and PhantomJS for our JavaScript web apps.

We also use tSQLt to test database logic.

If the domain logic lives in stored procedures, then the tests should be written at that level.

A good test…

- Should correspond to a single scenario and test only one thing.

- Is maintainable – remember that tests can be refactored too.

ReSharper is a great productivity tool and is especially useful when refactoring.

- Should be written in the ubiquitous language of the domain.

- Is repeatable, producing the same result every time it is run.

- Is independent, i.e. it must not depend on any other tests and can be run in any order. Each test uses its own test data.

- Provides meaningful failure messages in its asserts.

- Is isolated, depending as little as possible on infrastructure such as the network, file system, web services, message queues, environment variables and the system date.

- Is run end-to-end, i.e. from the API/domain layers right through to the persistence mechanism. This is the only way to prove that everything works. Try to avoid mocking your persistence and keep your test data up to date.

- Does its own housekeeping, i.e. data that is set up is also cleaned up, either per test or per test suite. Nothing is left behind.

- Is executable by a CI server (e.g. Jenkins).

- Is run continuously throughout development.
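Several of these properties can be seen together in one small test class. This is a minimal sketch in Python's `unittest`. It uses the standard-library `sqlite3` module as a stand-in for a real database, which is an assumption made for illustration. `CustomerService` and its methods are hypothetical. Each test gets its own database file and data, so the tests are independent and can run in any order. Each goes through the domain service all the way to SQL without mocking persistence. The tests clean up after themselves and give a failure message with every assert.

```python
import os
import sqlite3
import tempfile
import unittest


class CustomerService:
    """Hypothetical domain service that persists customers via SQL."""

    def __init__(self, conn: sqlite3.Connection):
        self._conn = conn

    def register(self, email: str) -> int:
        if "@" not in email:
            raise ValueError(f"invalid email: {email!r}")
        cur = self._conn.execute("INSERT INTO customers (email) VALUES (?)", (email,))
        self._conn.commit()
        return cur.lastrowid

    def find_email(self, customer_id: int):
        row = self._conn.execute(
            "SELECT email FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return row[0] if row else None


class CustomerRegistrationTests(unittest.TestCase):
    def setUp(self):
        # Each test gets its own database file and its own data.
        self._tmp = tempfile.TemporaryDirectory()
        self.conn = sqlite3.connect(os.path.join(self._tmp.name, "test.db"))
        self.conn.execute(
            "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)"
        )
        self.service = CustomerService(self.conn)

    def tearDown(self):
        # Housekeeping: close the connection and delete everything set up above.
        self.conn.close()
        self._tmp.cleanup()

    def test_registered_customer_can_be_found(self):
        # Given a valid email, when the customer registers...
        customer_id = self.service.register("ada@example.com")
        # ...then the customer is persisted and can be read back.
        self.assertEqual(
            self.service.find_email(customer_id), "ada@example.com",
            "registered customer should be retrievable by id",
        )

    def test_invalid_email_is_rejected(self):
        with self.assertRaises(ValueError, msg="email without '@' must be rejected"):
            self.service.register("not-an-email")
```

Setting up and tearing down per test costs a little speed. In return, no test can see data left behind by another.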

What to avoid

- Try to avoid dependencies on infrastructure. While some might be unavoidable, such as the database, these need to be kept to a minimum. Use fakes, mocks and stubs to minimise dependencies.

- Don’t write tests with code coverage as the goal. Every test has to be maintained, so tests must be meaningful. Tests written only to raise coverage add maintenance cost without adding value.

- If the tests haven’t gone green in the last few minutes, you’re probably spending too much time refactoring before re-running them. Take smaller steps.

- Watch out if you are spending a lot of time in the debugger. Perhaps the test is doing too much or is too complicated.
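One common way to keep infrastructure out of a test is to pass dependencies in and replace them in the test with a stub and a fake. This is a minimal sketch in Python's `unittest`. `ReminderService`, `FakeMailbox` and the overdue-invoice rule are all hypothetical. The system date is replaced by a stub that always returns the same day. The email sender is replaced by a fake that records messages in memory.

```python
import unittest
from datetime import date
from typing import Callable, List, Tuple


class ReminderService:
    """Hypothetical service: emails customers whose invoices are overdue.

    Its infrastructure dependencies (today's date and an email sender) are
    passed in, so tests can replace them instead of touching the real ones.
    """

    def __init__(self, today: Callable[[], date], send_email: Callable[[str, str], None]):
        self._today = today
        self._send_email = send_email

    def send_overdue_reminders(self, invoices: List[Tuple[str, date]]) -> int:
        today = self._today()
        sent = 0
        for email, due in invoices:
            if due < today:  # due strictly before today means overdue
                self._send_email(email, f"Your invoice was due on {due.isoformat()}")
                sent += 1
        return sent


class FakeMailbox:
    """Fake email sender: records messages in memory instead of sending them."""

    def __init__(self):
        self.sent: List[Tuple[str, str]] = []

    def send(self, to: str, body: str) -> None:
        self.sent.append((to, body))


class ReminderTests(unittest.TestCase):
    def test_only_overdue_invoices_trigger_a_reminder(self):
        # Given a fixed "today" (a stub for the system date) and a fake mailbox
        mailbox = FakeMailbox()
        service = ReminderService(today=lambda: date(2024, 3, 15), send_email=mailbox.send)
        invoices = [("late@example.com", date(2024, 3, 1)),
                    ("ontime@example.com", date(2024, 4, 1))]
        # When reminders are sent
        count = service.send_overdue_reminders(invoices)
        # Then only the overdue customer is emailed
        self.assertEqual(count, 1, "exactly one invoice is overdue")
        self.assertEqual([to for to, _ in mailbox.sent], ["late@example.com"],
                         "only the overdue customer should be emailed")
```

In production the same class would be built with the real dependencies, for example `today=date.today` and a real mail sender (not shown). Python's `unittest.mock` could replace the hand-rolled `FakeMailbox`, but a small fake like this often keeps the test easier to read.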

Dealing with legacy code

Legacy code has usually been written without tests, and it is difficult to write tests for code that wasn’t designed with testability in mind.

- Assess the logical complexity and the dependency levels of the code to decide where to start writing tests.

- If no API or domain layers are apparent, find the seams (places where behaviour can be changed without editing the code in place) or entry points, and write tests against them.

- The same rules as for new features apply to these tests: write tests for scenarios so that it is behaviour that gets tested. And don’t forget to keep your “to do” list.
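Here is one way a seam might look in practice. This is a minimal sketch in Python's `unittest`. The `late_fee` function and its rules are hypothetical. The seam is an optional `today` parameter added to a function that previously called `date.today()` directly. The tests are what Michael Feathers calls characterisation tests: they record what the code does now, including surprising cases, so that it can then be refactored safely. That technique is named here for illustration and is not prescribed above.

```python
import unittest
from datetime import date
from decimal import Decimal
from typing import Optional


def late_fee(balance: Decimal, due: date, today: Optional[date] = None) -> Decimal:
    """Hypothetical legacy calculation; only the `today` parameter is new.

    It originally called date.today() directly, which made it hard to test.
    The optional `today` parameter is the seam: existing callers are
    unchanged (they still get the real date), while tests pass a fixed one.
    """
    if today is None:
        today = date.today()
    days_late = (today - due).days
    if days_late <= 0:
        return Decimal("0.00")
    weeks_late = days_late // 7  # only whole weeks count
    rate = min(Decimal("0.02") * weeks_late, Decimal("0.10"))  # 2% a week, capped at 10%
    return (balance * rate).quantize(Decimal("0.01"))


class LateFeeCharacterisationTests(unittest.TestCase):
    """Pin down what the code does today, before changing anything."""

    DUE = date(2024, 1, 1)

    def test_no_fee_before_due_date(self):
        self.assertEqual(late_fee(Decimal("100.00"), self.DUE, today=date(2023, 12, 31)),
                         Decimal("0.00"), "not yet due: no fee")

    def test_part_week_late_currently_costs_nothing(self):
        # Possibly surprising, but it is the current behaviour, so it is recorded.
        self.assertEqual(late_fee(Decimal("100.00"), self.DUE, today=date(2024, 1, 4)),
                         Decimal("0.00"), "3 days late is not a whole week")

    def test_two_weeks_late_costs_four_percent(self):
        self.assertEqual(late_fee(Decimal("100.00"), self.DUE, today=date(2024, 1, 15)),
                         Decimal("4.00"), "14 days late = 2 weeks at 2%")

    def test_fee_is_capped_at_ten_percent(self):
        self.assertEqual(late_fee(Decimal("200.00"), self.DUE, today=date(2024, 4, 10)),
                         Decimal("20.00"), "long overdue fee is capped at 10%")
```

Once these tests are green, the function can be restructured. Any change in behaviour will show up as a red test rather than as a surprise in production.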

The benefits of TDD are huge. It has enabled us to write simpler, better-designed code, and it gives us the confidence to keep changing and refactoring that code as requirements evolve.

About the Author:

Paul Johnson is the Technology Director at KRS. Paul has been with KRS for 15 years. When he is not overseeing software quality and technological direction at KRS, he can be found at home creating delicious food or driving his “Scooby”.

Author’s Notes:

Aslam Khan is well known for his contributions to the Agile community and to improving software development in corporate IT. He introduced us to TDD and coached us in it, and he is currently collaborating with KRS on our latest Advanced Agile Developer course offering, as well as on designing single-page web applications.

Feature image courtesy of Hashnode